The archive

When you fit a Brush estimator, three new attributes are created: best_estimator_, population_, and archive_.

Brush stores the Pareto front, computed on the validation loss, as a list in archive_. This front is always built from the individuals of the final population that are non-dominated with respect to the scorer and complexity objectives. Setting "scorer" as an objective means optimizing whatever metric is passed as the scorer argument (a string).
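To make "non-dominated" concrete, here is a minimal, generic sketch of a non-dominance filter over (loss, complexity) pairs. It is not Brush's internal implementation: it assumes both objectives are minimized (a maximized metric such as balanced accuracy would be negated first), and the example values are made up.

```python
from typing import List, Tuple


def dominates(a: Tuple[float, float], b: Tuple[float, float]) -> bool:
    """True if `a` is no worse than `b` in every objective and strictly better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: List[Tuple[float, float]]) -> List[int]:
    """Return the indices of points that no other point dominates.

    Each point is (loss, complexity); both objectives are minimized.
    This is a simple O(n^2) check, meant for illustration only.
    """
    return [
        i
        for i, p in enumerate(points)
        if not any(dominates(q, p) for j, q in enumerate(points) if j != i)
    ]


# Hypothetical (validation loss, complexity) pairs for a tiny population.
population = [(0.30, 176), (0.30, 992), (0.10, 5000), (0.50, 20), (0.55, 40)]
print(pareto_front(population))  # [0, 2, 3]
```

Individual 1 is dropped because individual 0 has the same loss at lower complexity, and individual 4 is dropped because individual 3 beats it on both objectives; the survivors trade accuracy against complexity.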

If you need more flexibility, population_ contains the entire final population, and you can iterate through this list to select individuals by other criteria. Keep in mind, too, that Brush supports different optimization objectives through the objectives argument.
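As one example of a custom criterion, the sketch below picks the smallest candidate whose loss is within a tolerance of the best loss. It assumes you have already extracted each individual's loss and size into plain dicts; the keys "name", "loss", and "size" and the function simplest_within_tolerance are hypothetical, not part of Brush's API.

```python
from typing import Dict, List


def simplest_within_tolerance(candidates: List[Dict], tol: float = 0.05) -> Dict:
    """Pick the smallest candidate whose loss is within `tol` of the best loss.

    Assumes lower loss is better. The keys "loss" and "size" are hypothetical
    placeholders for values you extract from each individual yourself.
    """
    if not candidates:
        raise ValueError("no candidates to choose from")
    best_loss = min(c["loss"] for c in candidates)
    good_enough = [c for c in candidates if c["loss"] <= best_loss + tol]
    # Prefer the smallest model; among equal sizes, prefer the lower loss.
    return min(good_enough, key=lambda c: (c["size"], c["loss"]))


candidates = [
    {"name": "A", "loss": 0.10, "size": 31},
    {"name": "B", "loss": 0.13, "size": 5},
    {"name": "C", "loss": 0.20, "size": 3},
]
print(simplest_within_tolerance(candidates)["name"])  # B
```

Raising tol trades accuracy for simplicity; with tol=0.0 the function returns the lowest-loss candidate (ties broken by size).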

Each element of the archive is a Brush individual that can be serialized as a JSON object.
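Because the entries are JSON-serializable, Python's standard json module is enough to persist an archive and reload it later. The sketch below assumes each entry is (or has been converted to) a JSON-compatible dict; the helpers save_archive and load_archive and the entry fields are hypothetical placeholders, not Brush's schema.

```python
import json
import os
import tempfile
from typing import Any, Dict, List


def save_archive(archive: List[Dict[str, Any]], path: str) -> None:
    """Write a list of JSON-compatible dicts to `path`."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(archive, f, indent=2)


def load_archive(path: str) -> List[Dict[str, Any]]:
    """Read the list back from `path`."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


# Hypothetical entries: field names and values are placeholders.
archive = [
    {"model": "Logistic(Sum(-8.68,0.52*AIDS))", "size": 5},
    {"model": "Logistic(Sum(0.00,AIDS))", "size": 4},
]

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "archive.json")
    save_archive(archive, path)
    restored = load_archive(path)

print(restored == archive)  # True
```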

```python
import pandas as pd
from pybrush import BrushClassifier

# load data
df = pd.read_csv('../examples/datasets/d_analcatdata_aids.csv')
X = df.drop(columns='target')
y = df['target']

est = BrushClassifier(
    functions=['SplitBest','Add','Mul','Sin','Cos','Exp','Logabs'],
    objectives=["scorer", "linear_complexity"],
    scorer='balanced_accuracy', # Brush implements several metrics for classification and regression
    max_gens=100,
    pop_size=100,
    max_depth=10,
    max_size=100,
    verbosity=2,
)

est.fit(X, y)

print("Best model:", est.best_estimator_.get_model())
print('score:', est.score(X,y))
```

Generation 1/100 [/ ]

Best model on Val:Logistic(Sum(-0.32,If(AIDS>=16068.00,If(AIDS>=20712.00,1.00*Add(1.00,AIDS),1.00*Mul(1.00,AIDS)),If(Total>=1601948.00,1.00*Mul(20712.00*AIDS,AIDS),If(AIDS>=258.00,AIDS,-0.32)))))

Train Loss (Med): 0.77500 (0.56250)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 7 (95)

Median complexity (Max): 992 (921596320)

Time (s): 0.10080

Generation 2/100 [// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 7 (98)

Median complexity (Max): 176 (1657696672)

Time (s): 0.14870

Generation 3/100 [// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (93)

Median complexity (Max): 176 (1304140832)

Time (s): 0.19549

Generation 4/100 [/// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (54)

Median complexity (Max): 176 (12044960)

Time (s): 0.23452

Generation 5/100 [/// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (54)

Median complexity (Max): 176 (12044960)

Time (s): 0.26943

Generation 6/100 [//// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (52)

Median complexity (Max): 176 (12044960)

Time (s): 0.30666

Generation 7/100 [//// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (52)

Median complexity (Max): 176 (12044960)

Time (s): 0.34096

Generation 8/100 [///// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (52)

Median complexity (Max): 176 (11307680)

Time (s): 0.37853

Generation 9/100 [///// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (54)

Median complexity (Max): 176 (11307680)

Time (s): 0.41267

Generation 10/100 [////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (54)

Median complexity (Max): 176 (11307680)

Time (s): 0.45000

Generation 11/100 [////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (52)

Median complexity (Max): 176 (10717856)

Time (s): 0.48708

Generation 12/100 [/////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (50)

Median complexity (Max): 176 (10422944)

Time (s): 0.52469

Generation 13/100 [/////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (50)

Median complexity (Max): 176 (10422944)

Time (s): 0.55745

Generation 14/100 [//////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (50)

Median complexity (Max): 176 (10422944)

Time (s): 0.59163

Generation 15/100 [//////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (49)

Median complexity (Max): 176 (10078880)

Time (s): 0.62577

Generation 16/100 [///////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (49)

Median complexity (Max): 176 (10078880)

Time (s): 0.65858

Generation 17/100 [///////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (49)

Median complexity (Max): 176 (10078880)

Time (s): 0.69383

Generation 18/100 [////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (49)

Median complexity (Max): 176 (10078880)

Time (s): 0.72813

Generation 19/100 [////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (49)

Median complexity (Max): 176 (10078880)

Time (s): 0.76378

Generation 20/100 [/////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (49)

Median complexity (Max): 176 (10078880)

Time (s): 0.80245

Generation 21/100 [/////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (49)

Median complexity (Max): 176 (10078880)

Time (s): 0.83974

Generation 22/100 [//////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (10078112)

Time (s): 0.88074

Generation 23/100 [//////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (10078112)

Time (s): 0.92155

Generation 24/100 [///////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (10078112)

Time (s): 0.95491

Generation 25/100 [///////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (10078112)

Time (s): 0.99640

Generation 26/100 [////////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (5654432)

Time (s): 1.03516

Generation 27/100 [////////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (5654432)

Time (s): 1.07259

Generation 28/100 [/////////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (5654432)

Time (s): 1.11719

Generation 29/100 [/////////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (5654432)

Time (s): 1.15723

Generation 30/100 [//////////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (5654432)

Time (s): 1.19967

Generation 31/100 [//////////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (5654432)

Time (s): 1.24691

Generation 32/100 [///////////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.50000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (5654432)

Time (s): 1.30097

Generation 33/100 [///////////////// ]

Best model on Val:Logistic(Sum(-8.68,0.52*AIDS))

Train Loss (Med): 0.77500 (0.52500)

Val Loss (Med): 0.70000 (0.60000)

Median Size (Max): 5 (47)

Median complexity (Max): 176 (5654432)

Time (s): 1.34932

Generation 34/100 [////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,AIDS,1.00*Cos(Total)))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (56)

Median complexity (Max): 176 (21677984)

Time (s): 1.40049

Generation 35/100 [////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,AIDS,1.00*Cos(Total)))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.55000)

Median Size (Max): 5 (56)

Median complexity (Max): 176 (21677984)

Time (s): 1.45974

Generation 36/100 [/////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,AIDS,1.00*Cos(Total)))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (56)

Median complexity (Max): 176 (21677984)

Time (s): 1.52614

Generation 37/100 [/////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (111)

Median complexity (Max): 176 (1343408032)

Time (s): 1.58717

Generation 38/100 [//////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (104)

Median complexity (Max): 176 (20891552)

Time (s): 1.64133

Generation 39/100 [//////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (106)

Median complexity (Max): 176 (20891552)

Time (s): 1.69622

Generation 40/100 [///////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (106)

Median complexity (Max): 176 (20891552)

Time (s): 1.75234

Generation 41/100 [///////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (106)

Median complexity (Max): 176 (20891552)

Time (s): 1.80336

Generation 42/100 [////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (72)

Median complexity (Max): 176 (20891552)

Time (s): 1.86306

Generation 43/100 [////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (74)

Median complexity (Max): 176 (20891552)

Time (s): 1.90866

Generation 44/100 [/////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (74)

Median complexity (Max): 176 (20891552)

Time (s): 1.95261

Generation 45/100 [/////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (72)

Median complexity (Max): 176 (20891552)

Time (s): 2.01117

Generation 46/100 [//////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (60)

Median complexity (Max): 176 (73582496)

Time (s): 2.06181

Generation 47/100 [//////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (60)

Median complexity (Max): 176 (73582496)

Time (s): 2.13684

Generation 48/100 [///////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (58)

Median complexity (Max): 176 (31115168)

Time (s): 2.18129

Generation 49/100 [///////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,AIDS,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (58)

Median complexity (Max): 176 (31115168)

Time (s): 2.22840

Generation 50/100 [////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,1.00*Cos(1.00*Exp(Total)),Total),If(Total>=1601948.00,1.00,If(AIDS>=258.00,1.00,1.00*Cos(Total)))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (60)

Median complexity (Max): 176 (31115168)

Time (s): 2.28667

Generation 51/100 [////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,Cos(Exp(1.00)),Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (50)

Median complexity (Max): 176 (29640608)

Time (s): 2.46638

Generation 52/100 [/////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,Cos(Exp(1.00)),Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (36)

Median complexity (Max): 176 (4652960)

Time (s): 2.56978

Generation 53/100 [/////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,Cos(Exp(1.00)),Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (36)

Median complexity (Max): 176 (4652960)

Time (s): 2.66823

Generation 54/100 [//////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,Cos(Exp(1.00)),Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (36)

Median complexity (Max): 176 (4652960)

Time (s): 2.78801

Generation 55/100 [//////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,Cos(Exp(1.00)),Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.60000)

Median Size (Max): 5 (36)

Median complexity (Max): 176 (4652960)

Time (s): 2.91201

Generation 56/100 [///////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (2760608)

Time (s): 3.02868

Generation 57/100 [///////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (2760608)

Time (s): 3.08023

Generation 58/100 [////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (2760608)

Time (s): 3.12875

Generation 59/100 [////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (2760608)

Time (s): 3.17882

Generation 60/100 [/////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (2760608)

Time (s): 3.22413

Generation 61/100 [/////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (2760608)

Time (s): 3.27697

Generation 62/100 [//////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (1279904)

Time (s): 3.32183

Generation 63/100 [//////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (1279904)

Time (s): 3.37218

Generation 64/100 [///////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (1279904)

Time (s): 3.42008

Generation 65/100 [///////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (1279904)

Time (s): 3.46696

Generation 66/100 [////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (1279904)

Time (s): 3.51688

Generation 67/100 [////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (1279904)

Time (s): 3.56779

Generation 68/100 [/////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (1279904)

Time (s): 3.61874

Generation 69/100 [/////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.82500 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (31)

Median complexity (Max): 176 (1279904)

Time (s): 3.66529

Generation 70/100 [//////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.75000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (19)

Median complexity (Max): 176 (69536)

Time (s): 3.71265

Generation 71/100 [//////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.75000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (19)

Median complexity (Max): 176 (69536)

Time (s): 3.75228

Generation 72/100 [///////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.75000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (19)

Median complexity (Max): 176 (69536)

Time (s): 3.80158

Generation 73/100 [///////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.75000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (19)

Median complexity (Max): 176 (69536)

Time (s): 3.84654

Generation 74/100 [////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (69536)

Time (s): 3.89059

Generation 75/100 [////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 3.95229

Generation 76/100 [/////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 4.02272

Generation 77/100 [/////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 4.08319

Generation 78/100 [//////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 4.13113

Generation 79/100 [//////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 4.18934

Generation 80/100 [///////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 4.24344

Generation 81/100 [///////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 4.30500

Generation 82/100 [////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 4.39221

Generation 83/100 [////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 4.43939

Generation 84/100 [/////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (41)

Median complexity (Max): 176 (473464736)

Time (s): 4.48879

Generation 85/100 [/////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (40)

Median complexity (Max): 176 (1178785696)

Time (s): 4.54237

Generation 86/100 [//////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (40)

Median complexity (Max): 176 (1178785696)

Time (s): 4.61088

Generation 87/100 [//////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (35)

Median complexity (Max): 176 (321050528)

Time (s): 4.69097

Generation 88/100 [///////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (35)

Median complexity (Max): 176 (321050528)

Time (s): 4.75119

Generation 89/100 [///////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (35)

Median complexity (Max): 176 (321050528)

Time (s): 4.80611

Generation 90/100 [////////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (35)

Median complexity (Max): 176 (321050528)

Time (s): 4.86054

Generation 91/100 [////////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (33)

Median complexity (Max): 176 (66246560)

Time (s): 4.92291

Generation 92/100 [/////////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (33)

Median complexity (Max): 176 (66246560)

Time (s): 4.97706

Generation 93/100 [/////////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (30)

Median complexity (Max): 176 (11042720)

Time (s): 5.03965

Generation 94/100 [//////////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (30)

Median complexity (Max): 176 (11042720)

Time (s): 5.08854

Generation 95/100 [//////////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (30)

Median complexity (Max): 176 (11042720)

Time (s): 5.14694

Generation 96/100 [///////////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (30)

Median complexity (Max): 176 (11042720)

Time (s): 5.18979

Generation 97/100 [///////////////////////////////////////////////// ]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (30)

Median complexity (Max): 176 (11042720)

Time (s): 5.23257

Generation 98/100 [//////////////////////////////////////////////////]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (30)

Median complexity (Max): 176 (11042720)

Time (s): 5.27898

Generation 99/100 [//////////////////////////////////////////////////]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (30)

Median complexity (Max): 176 (11042720)

Time (s): 5.34219

Generation 100/100 [//////////////////////////////////////////////////]

Best model on Val:Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

Train Loss (Med): 0.85000 (0.52500)

Val Loss (Med): 0.90000 (0.50000)

Median Size (Max): 5 (30)

Median complexity (Max): 176 (11042720)

Time (s): 5.39780

Best model: Logistic(Sum(0.00,If(AIDS>=16068.00,1.00,1.00*Mul(If(Total>=1601948.00,-0.91,Total),If(AIDS>=258.00,1.00,Cos(Total))))))

score: 0.84

You can access individuals in the archive by index:

print(len(est.archive_))

print(est.archive_[-1].get_model())

2

Logistic(Sum(-11.58,AIDS))
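Indexing is not the only way to choose a model. The following is a minimal sketch of one alternative rule: take the simplest archive member whose validation value reaches a threshold. It only assumes that each individual has `fitness.loss_v` and `fitness.linear_complexity`, the same attributes used to plot the Pareto front, and that greater `loss_v` is better for this scorer, as the first plot's axis label states. The `SimpleNamespace` objects and the helper names `pick_simplest` and `fake_individual` are hypothetical stand-ins so the snippet runs without a fitted estimator. With Brush you would pass `est.archive_` instead.

```python
from types import SimpleNamespace


def pick_simplest(individuals, min_score):
    """Return the individual with the lowest linear_complexity among those
    whose fitness.loss_v is at least min_score (assumes greater loss_v is
    better, as with balanced accuracy). Returns None if none qualify."""
    eligible = [ind for ind in individuals if ind.fitness.loss_v >= min_score]
    if not eligible:
        return None
    return min(eligible, key=lambda ind: ind.fitness.linear_complexity)


# Hypothetical stand-ins that mimic only the fitness fields of Brush individuals
def fake_individual(name, loss_v, complexity):
    return SimpleNamespace(
        name=name,
        fitness=SimpleNamespace(loss_v=loss_v, linear_complexity=complexity),
    )


# Illustrative values, not taken from a real run
archive = [fake_individual("big", 0.90, 180), fake_individual("small", 0.60, 16)]

chosen = pick_simplest(archive, min_score=0.55)
print(chosen.name)  # small
```

With a fitted estimator, `pick_simplest(est.archive_, 0.55)` would return a Brush individual, and you could call `.get_model()` on it as above.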

You can also call predict (or predict_proba, if est is a BrushClassifier) on individuals from the archive or the population. First, wrap the data in a Brush Dataset so that the feature names match those used during training:

from pybrush import Dataset

data = Dataset(X=X, ref_dataset=est.data_,
               feature_names=est.feature_names_)

est.archive_[-1].predict(data)

array([ True, True, True, True, True, True, True, True, True,

True, True, True, True, True, True, True, True, True,

True, True, True, True, True, True, True, True, True,

True, True, True, True, True, True, True, True, True,

True, True, True, True, False, True, True, True, True,

False, True, True, True, True])

est.archive_[-1].predict_proba(data)

array([1.0000000e+00, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

9.9999940e-01, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

3.7768183e-03, 1.0000000e+00, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 1.8871395e-04, 1.0000000e+00, 1.0000000e+00,

1.0000000e+00, 9.1870719e-01], dtype=float32)
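The two outputs above are consistent with `predict` thresholding `predict_proba` at 0.5. The only `False` predictions (positions 40 and 45) are exactly where the probability is about 0.0038 and 0.00019. The sketch below illustrates that relationship. It assumes a 0.5 cut-off, which is inferred from these outputs and not confirmed from Brush's source. Whether a probability of exactly 0.5 maps to `True` or `False` is also an assumption. The probabilities are copied from the output above, and `predict_from_proba` is a hypothetical helper.

```python
def predict_from_proba(probabilities, threshold=0.5):
    """Label each sample True when its positive-class probability exceeds
    threshold (0.5 is an assumed cut-off inferred from the outputs above)."""
    return [p > threshold for p in probabilities]


# A few probabilities copied from the predict_proba output above
probs = [1.0, 9.9999940e-01, 3.7768183e-03, 1.8871395e-04, 9.1870719e-01]
print(predict_from_proba(probs))  # [True, True, False, False, True]
```

If the default trade-off between the two classes does not suit your problem, you can apply a different threshold to the `predict_proba` output in the same way.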

Loading a specific model from the archive

Use it as if it were a scikit-learn-compatible estimator!

ind_from_arch = est.archive_[-1]

print(ind_from_arch.get_model())

print(ind_from_arch.fitness)

Logistic(Sum(-11.58,AIDS))

Fitness(0.600000 16.000000 )
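The printed `Fitness` appears to list one value per objective, in the order given by `objectives=["scorer", "linear_complexity"]`: 0.6 for balanced accuracy and 16 for linear complexity. Treat that reading as an assumption. Under it, you can check Pareto dominance between two individuals by attaching a sign to each objective: +1 for objectives to maximize and -1 for objectives to minimize. The sketch below does this. The helper `dominates` and the second fitness tuple are illustrative, not part of Brush.

```python
def dominates(a, b, signs):
    """True if fitness a Pareto-dominates fitness b.

    signs[i] is +1 if objective i is maximized and -1 if it is minimized.
    a dominates b when it is no worse on every objective and strictly
    better on at least one."""
    no_worse = all(s * x >= s * y for x, y, s in zip(a, b, signs))
    strictly_better = any(s * x > s * y for x, y, s in zip(a, b, signs))
    return no_worse and strictly_better


SIGNS = (+1, -1)  # (balanced accuracy: maximize, linear complexity: minimize)

simple = (0.60, 16)   # values printed for ind_from_arch above
bigger = (0.60, 176)  # illustrative: same score, higher complexity

print(dominates(simple, bigger, SIGNS))  # True
print(dominates(bigger, simple, SIGNS))  # False
```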

To make predictions with this individual, wrap the data in a Dataset:

from pybrush import Dataset

data = Dataset(X=X, ref_dataset=est.data_,
               feature_names=est.feature_names_)

ind_from_arch.predict(data)

array([ True, True, True, True, True, True, True, True, True,

True, True, True, True, True, True, True, True, True,

True, True, True, True, True, True, True, True, True,

True, True, True, True, True, True, True, True, True,

True, True, True, True, False, True, True, True, True,

False, True, True, True, True])
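Because an individual's predictions can be compared directly against `y`, you can score archive or population members yourself. The sketch below is a minimal pure-Python implementation of balanced accuracy, the mean of per-class recall and the metric set as `scorer` in the first estimator. The sample labels are illustrative, and `balanced_accuracy` is a hypothetical helper. If scikit-learn is available, `sklearn.metrics.balanced_accuracy_score` computes the same quantity.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean recall over the classes present in y_true."""
    classes = sorted(set(y_true))
    recalls = []
    for c in classes:
        # positions whose true label is c
        idx = [i for i, t in enumerate(y_true) if t == c]
        # how many of those positions were predicted as c
        hits = sum(1 for i in idx if y_pred[i] == c)
        recalls.append(hits / len(idx))
    return sum(recalls) / len(recalls)


# Illustrative labels: recall is 2/3 for True and 1/2 for False
y_true = [True, True, True, False, False]
y_pred = [True, True, False, False, True]
print(balanced_accuracy(y_true, y_pred))
```

With the objects in this notebook, something like `balanced_accuracy(list(y), list(ind_from_arch.predict(data)))` should work, assuming `y` holds 0/1 or boolean labels. Python treats `1 == True` as equal, so either encoding compares correctly.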


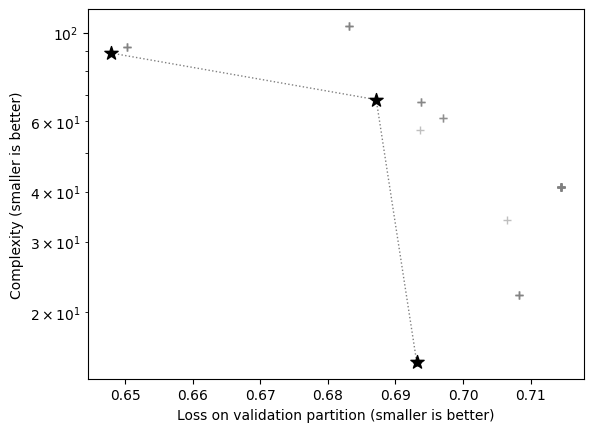

Visualizing the Pareto front of the archive

import matplotlib.pyplot as plt

xs, ys = [], []

for ind in est.archive_:

xs.append(ind.fitness.loss_v)

ys.append(ind.fitness.linear_complexity)

print(len(xs))

plt.scatter(xs, ys, alpha=0.25, c='b', linewidth=1.0)

plt.yscale('log')

plt.xlabel("Loss on validation partition (greater is better)")

plt.ylabel("Complexity (smaller is better)")

2


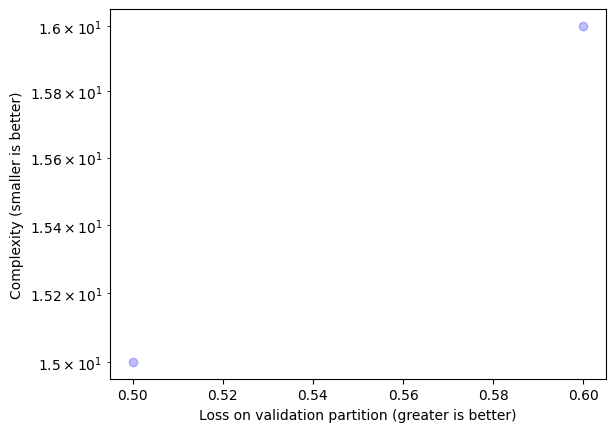

Accessing the entire population (unique individuals)

est = BrushClassifier(

# functions=['SplitBest','Add','Mul','Sin','Cos','Exp','Logabs'],

objectives=["scorer", "linear_complexity"],

max_depth=5,

max_size=75,

max_gens=100,

pop_size=200,

verbosity=1

)

est.fit(X,y)

print("Best model:", est.best_estimator_.get_model())

print('score:', est.score(X,y))

Completed 100% [====================]

Best model: Logistic(Sum(-0.91,0.04*Max(0.39*AIDS,0.43*AIDS,0.43*AIDS,0.52*AIDS)))

score: 0.54

plt.figure()

xs, ys = [], []

for ind in est.population_:

# plot the same quantities that were set as objectives

xs.append(ind.fitness.loss_v)

ys.append(ind.fitness.linear_complexity)

plt.scatter(xs, ys, alpha=0.5, c='gray', marker='+', linewidth=1.0)

xs, ys = [], []

for ind in est.archive_:

xs.append(ind.fitness.loss_v)

ys.append(ind.fitness.linear_complexity)

plt.scatter(xs, ys, alpha=1.0, c='k', marker='*', s=100, linewidth=1.0)

plt.plot(xs, ys, alpha=0.5, c='k', ls=':', linewidth=1.0)

plt.yscale('log')

plt.xlabel("Loss on validation partition (smaller is better)")

plt.ylabel("Complexity (smaller is better)")

plt.show()
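The archive holds the individuals of the final population that are not dominated, so you can recompute that subset from the `(loss_v, linear_complexity)` pairs collected for the gray markers. The sketch below does this, assuming both values are minimized, which matches the axis labels of this second plot. The point list and the helper `pareto_front` are illustrative. Returning the front sorted by loss also lets `plt.plot` draw a clean line, instead of connecting points in whatever order they appear in the archive.

```python
def pareto_front(points):
    """Return the non-dominated (loss, complexity) pairs, both minimized,
    sorted by loss so they can be drawn as a connected front."""
    front = []
    for i, (loss_i, comp_i) in enumerate(points):
        # point i is dominated if some other point is no worse on both
        # objectives and strictly better on at least one
        dominated = any(
            loss_j <= loss_i and comp_j <= comp_i
            and (loss_j < loss_i or comp_j < comp_i)
            for j, (loss_j, comp_j) in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append((loss_i, comp_i))
    # drop exact duplicates and order by loss for plotting
    return sorted(set(front))


# Illustrative (loss, complexity) pairs; with Brush, build them from est.population_
pts = [(0.70, 10), (0.65, 40), (0.65, 30), (0.80, 5), (0.75, 50)]
print(pareto_front(pts))  # [(0.65, 30), (0.7, 10), (0.8, 5)]
```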