Research

Our research focuses on developing machine learning methods and using them to explain the principles underlying complex biomedical processes. We use these methods to learn predictive models from electronic health records (EHRs) that are both interpretable to clinicians and fair to the population on which they are deployed. Our long-term goal is to improve human health by developing methods flexible enough to automate entire computational workflows underlying scientific discovery and medicine.

See our publications and posts about them.

Projects

Automating Digital Health

Electrocardiograms (ECGs) are a cheap and ubiquitous measure of the electrical activity of the heart. Advances in AI have demonstrated enormous prognostic v...

Electronic fetal monitoring (EFM) is currently used in the vast majority of hospital births in the United States to monitor the fetal heart rate. Despit...

Fair Machine Learning for Health

Improving the fairness of machine learning models is a nuanced task that requires decision makers to reason about multiple, conflicting criteria. The majori...

We are developing algorithms that can adapt to changing hospital environments in real time and make predictions that are equally accurate among patient subpo...

Interpretable Machine Learning for Health

Some AI models do not need to be explained; evidence of their reliability is enough. But when it comes to many medical applications of AI, the explainabilit...

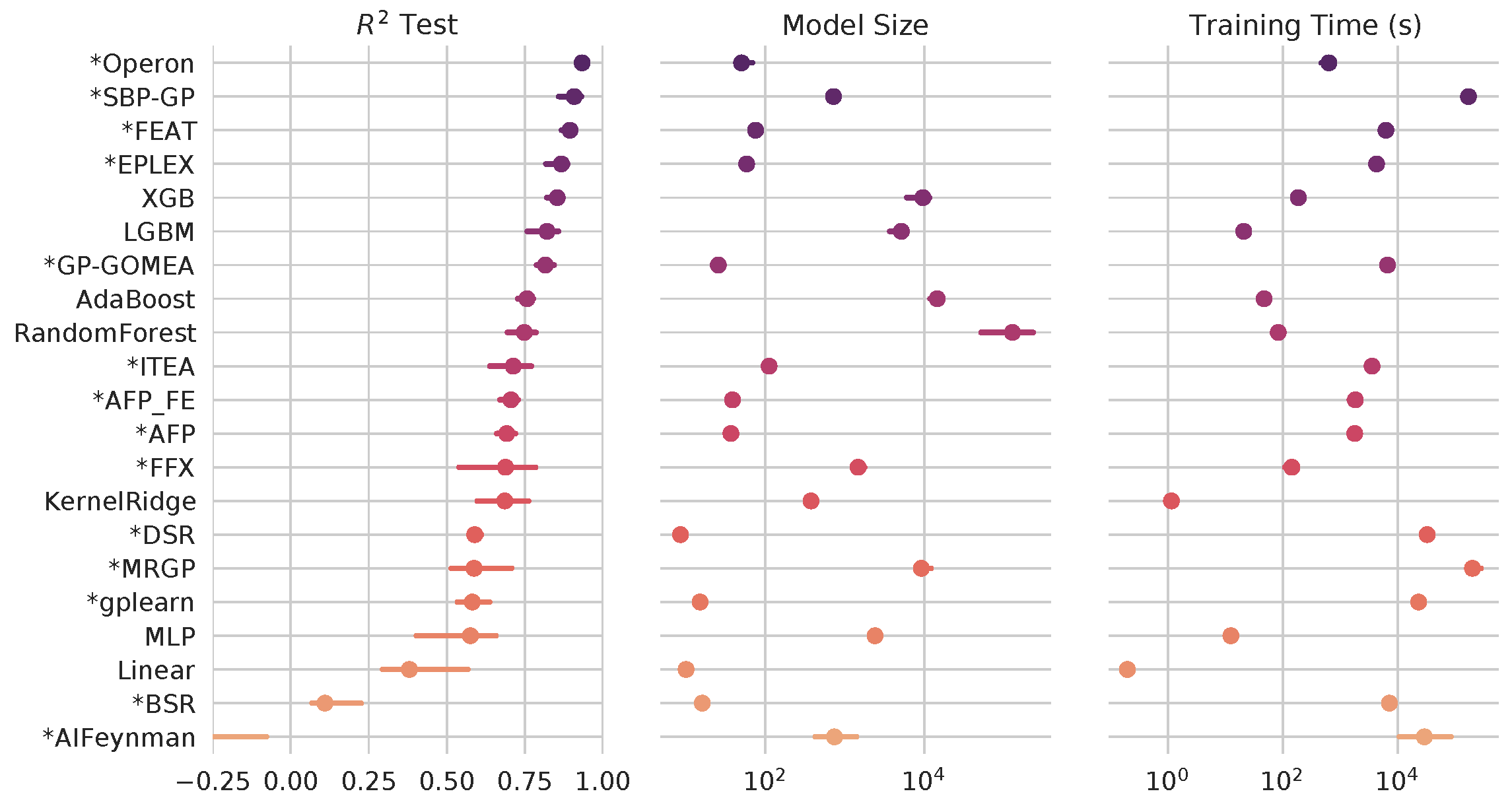

Methods for symbolic regression (SR) have come a long way since the days of Koza-style genetic programming (GP). Our goal with this project is to create a ...